Researchers from Google Research and UC Berkeley Introduce BoTNet: A Simple Backbone Architecture that Implements Self-Attention Computer Vision Tasks - MarkTechPost

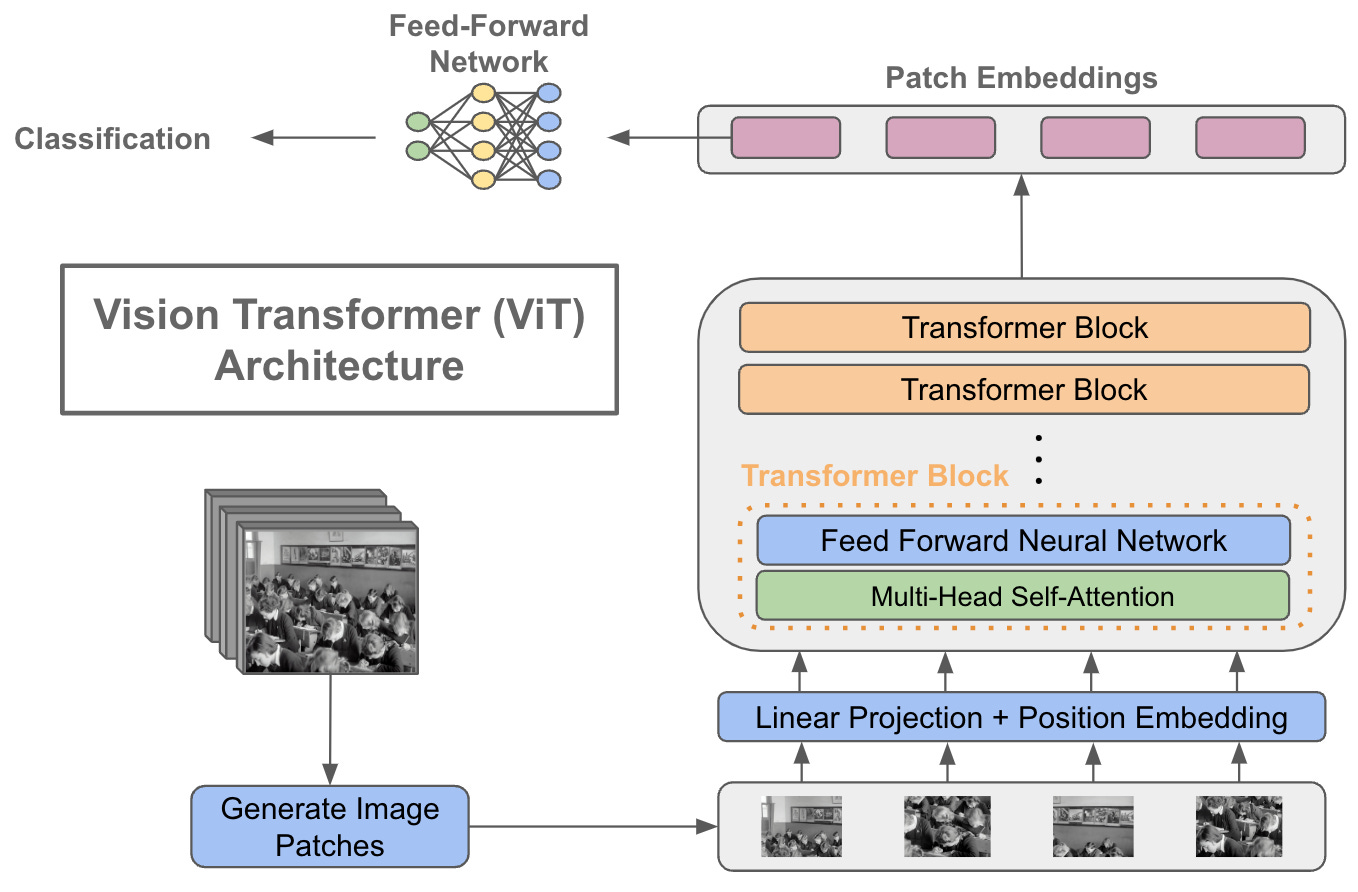

Vision Transformers: Natural Language Processing (NLP) Increases Efficiency and Model Generality | by James Montantes | Becoming Human: Artificial Intelligence Magazine

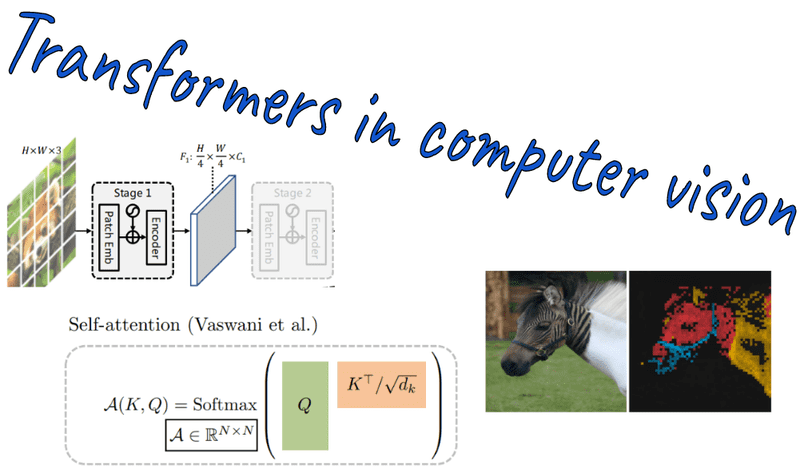

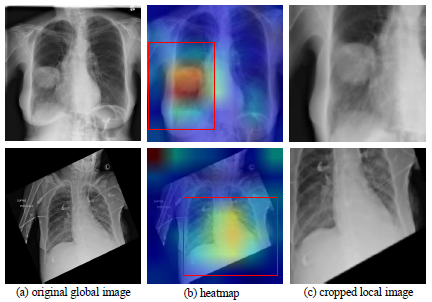

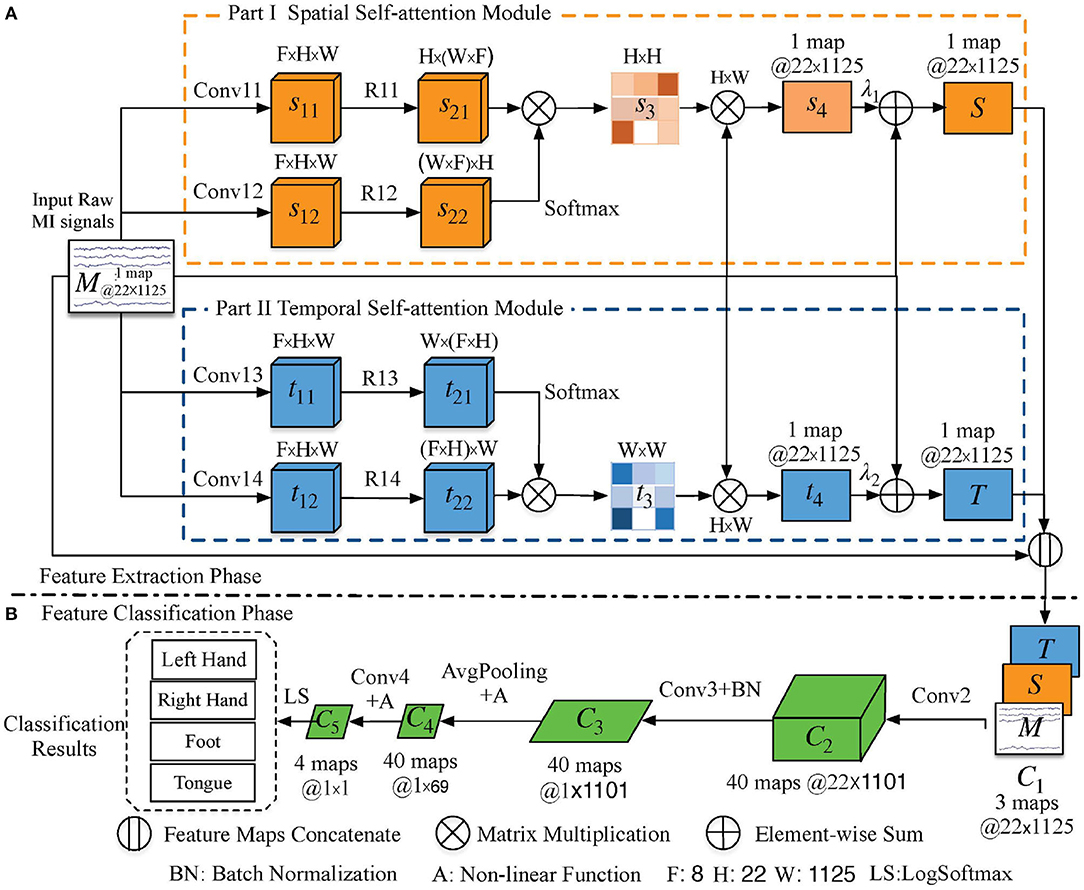

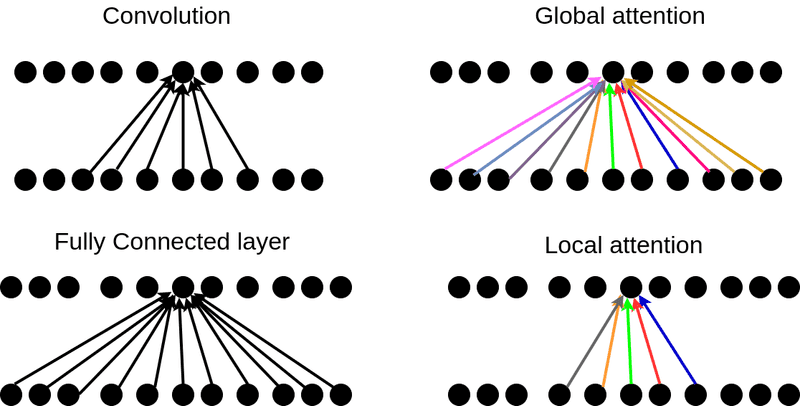

A Survey of Attention Mechanism and Using Self-Attention Model for Computer Vision | by Swati Narkhede | The Startup | Medium

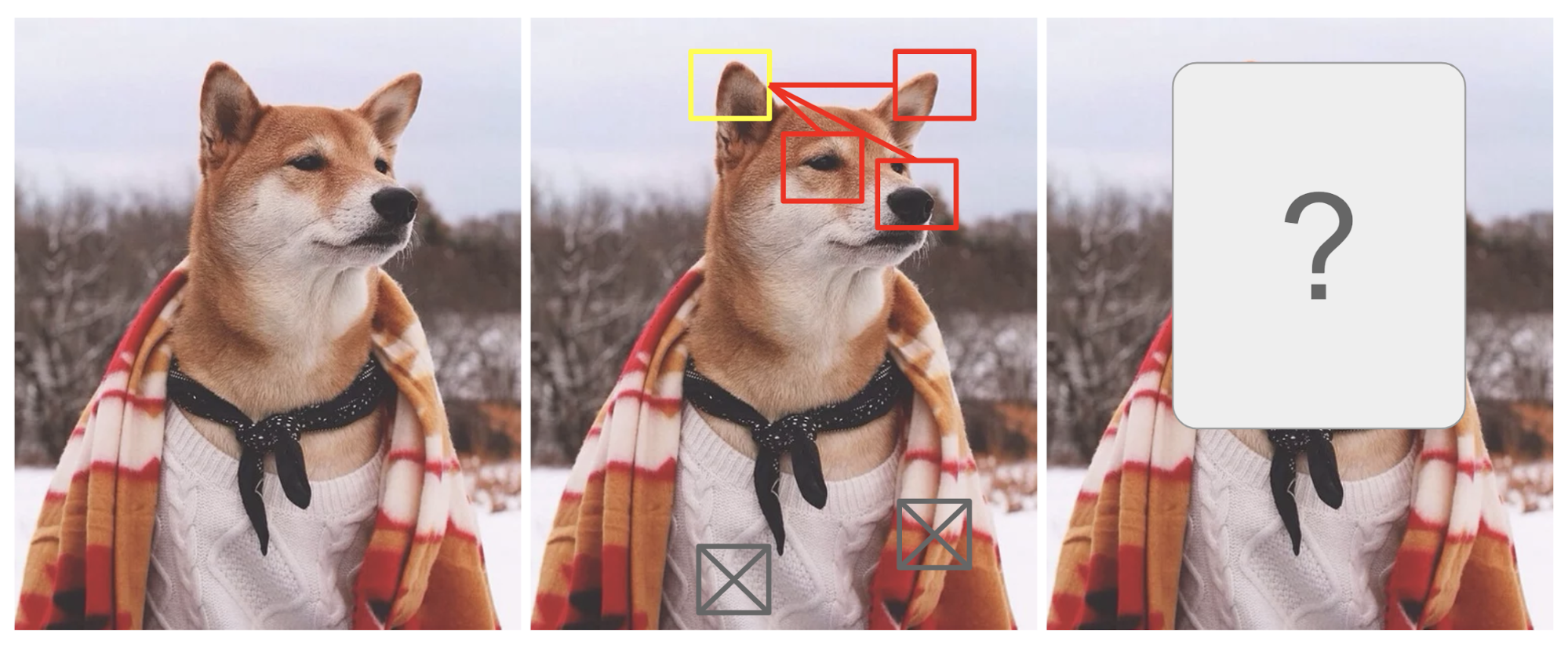

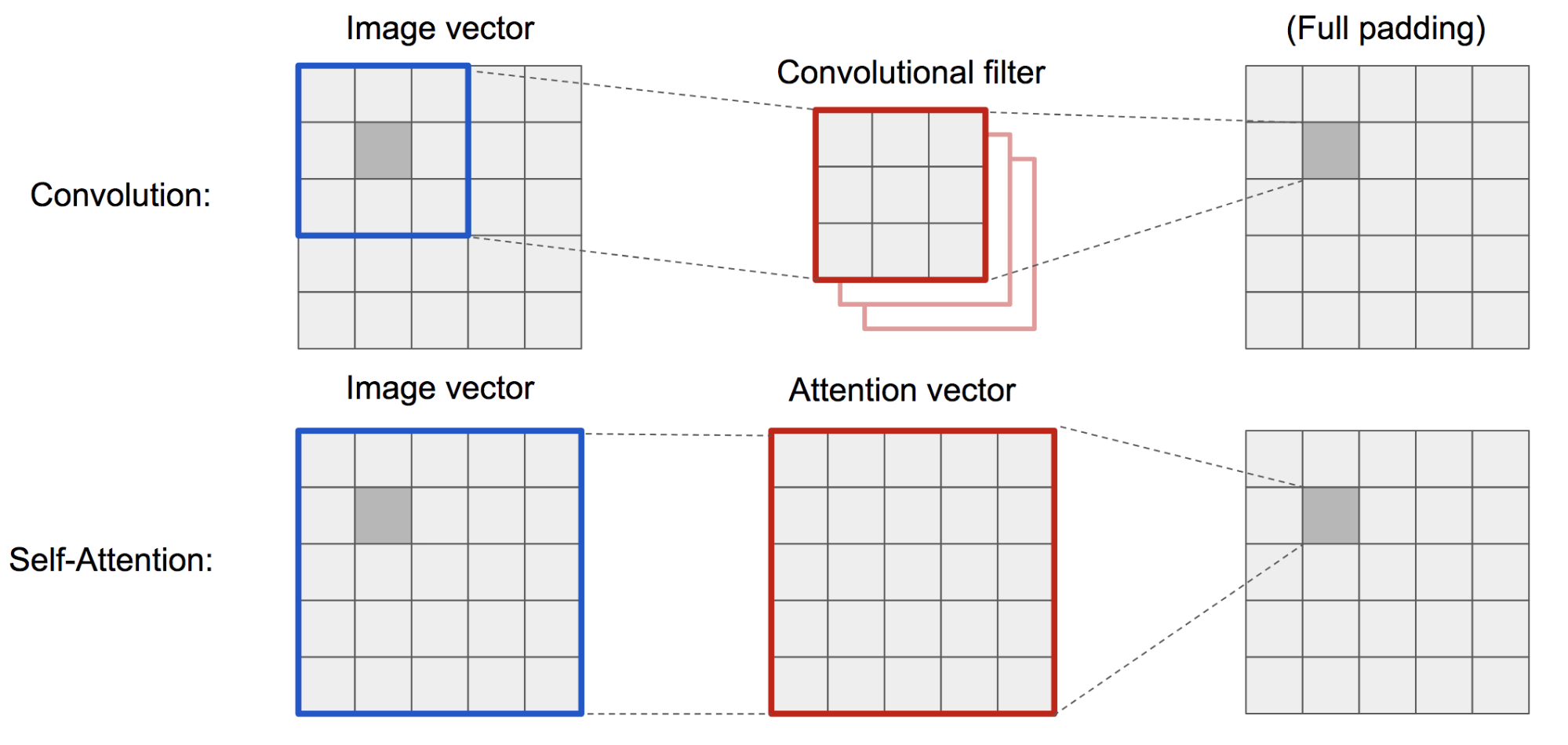

New Study Suggests Self-Attention Layers Could Replace Convolutional Layers on Vision Tasks | Synced

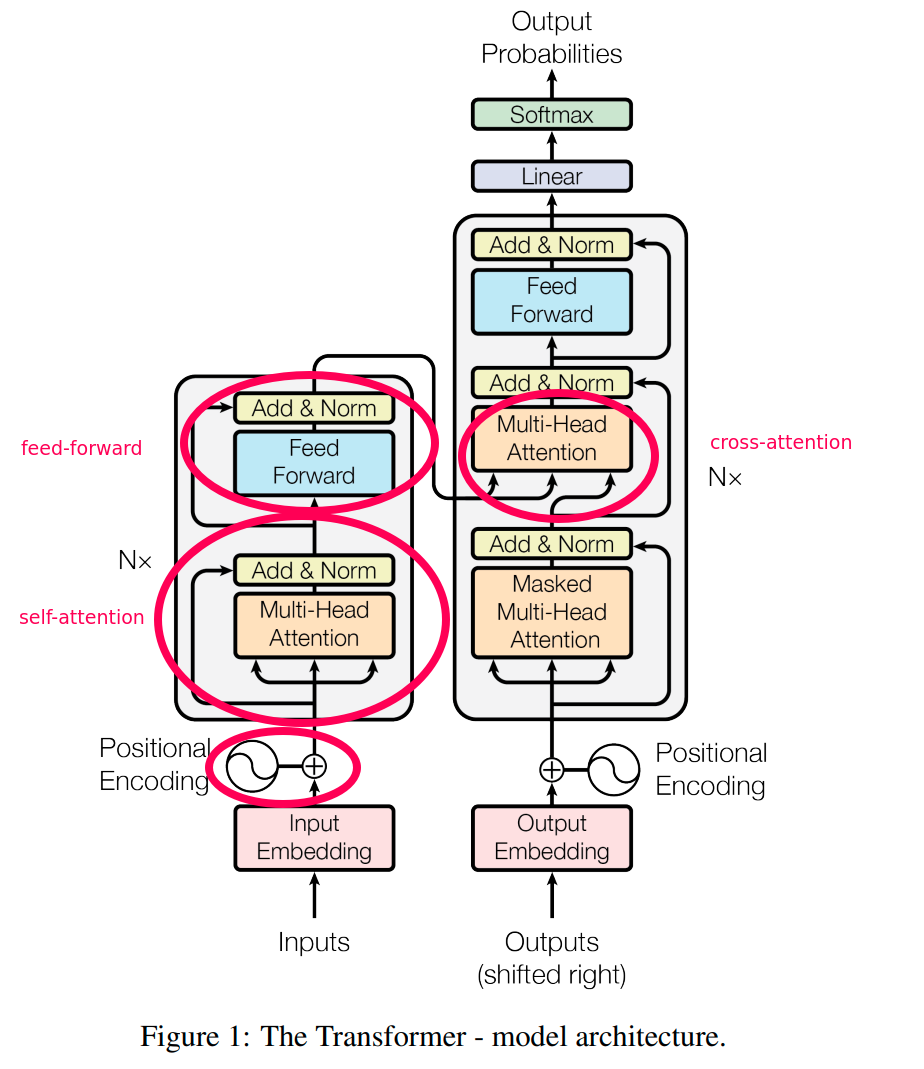

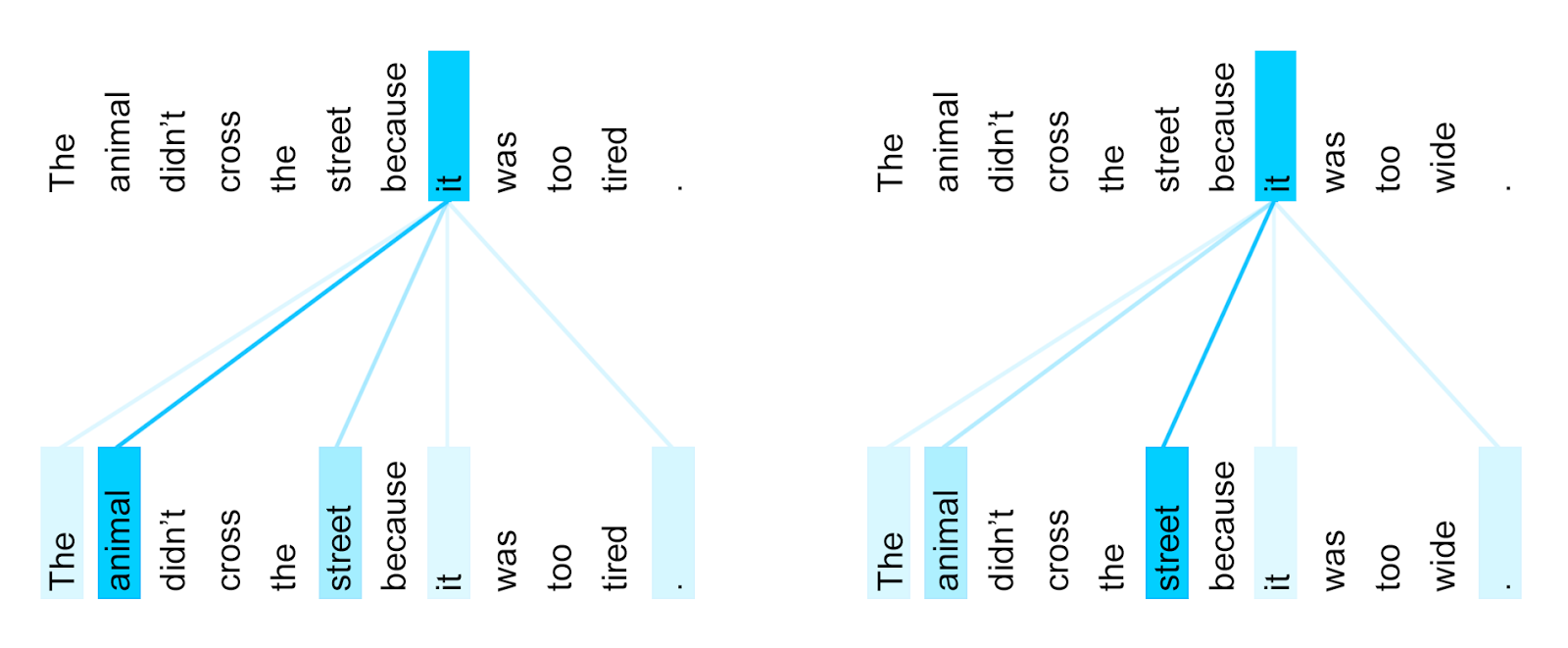

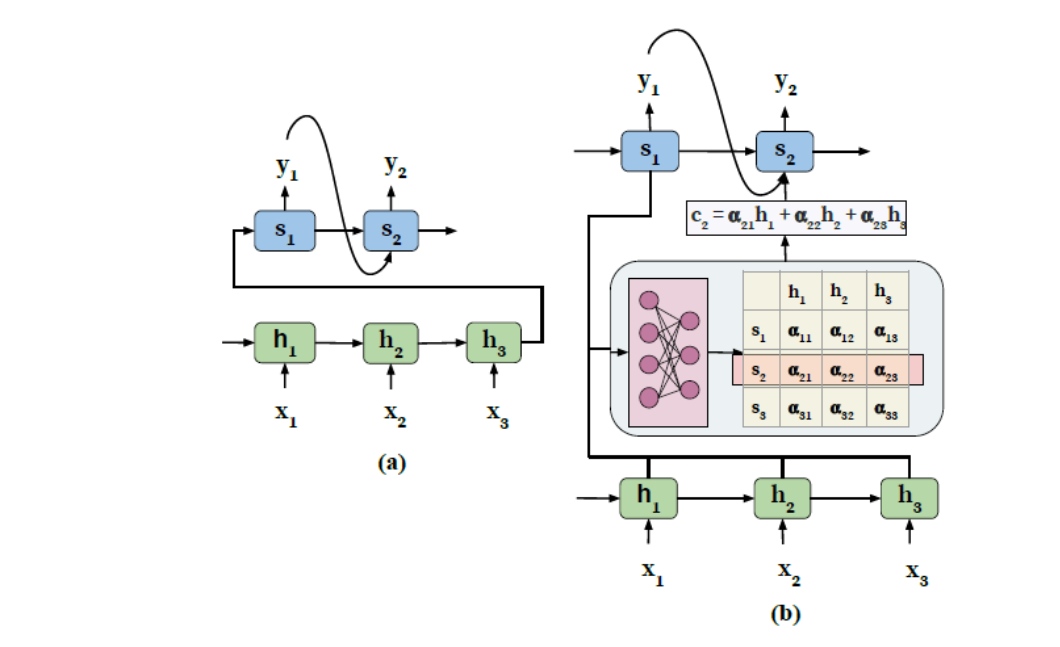

How Attention works in Deep Learning: understanding the attention mechanism in sequence models | AI Summer